Before segmentation, the variation in brightness across the 3D objects was dealt with first. Brightness information can be disregarded if the RGB values are normalized by the intensity at each point. That is, each of the R, G, and B values at a point is divided by the sum of the RGB values at that point. This color space, which contains only chromaticity (color) information, is called the normalized chromaticity coordinates, or NCC. Figure 1 shows the NCC space, where the x-axis is r and the y-axis is g. The b coordinate need not be shown because r + g + b = 1, so its value can be derived from r and g.

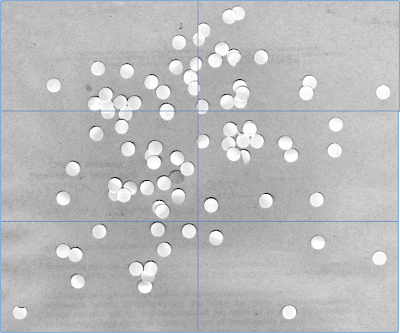
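The normalization described above can be sketched in a few lines of Python with NumPy. This is only an illustration, not the code used for the results in this post: the function name `rgb_to_ncc` and the handling of pure black pixels (mapped to r = g = 0) are assumptions.

```python
import numpy as np

def rgb_to_ncc(image):
    """Convert an H x W x 3 RGB array to normalized chromaticity (r, g)."""
    rgb = np.asarray(image, dtype=np.float64)
    intensity = rgb.sum(axis=-1)  # R + G + B at each pixel
    # Assumption: black pixels (intensity 0) carry no color information,
    # so divide by 1 instead of 0 and let them end up at r = g = 0.
    safe = np.where(intensity == 0, 1.0, intensity)
    r = rgb[..., 0] / safe
    g = rgb[..., 1] / safe
    return r, g

# Two pixels of the same color at different brightness levels.
pixels = np.array([[[200, 100, 100], [100, 50, 50]]])
r, g = rgb_to_ncc(pixels)
b = 1.0 - r - g  # b follows from r and g
```

Both pixels land on the same (r, g) point, which is why the brightness difference between them no longer matters after normalization.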

In nonparametric segmentation, no form of PDF is assumed; instead, the 2D histogram of the binned rg values of the region of interest (ROI) is used. The histogram is an N x N matrix, where N is the number of bins along each axis. Sample code for creating a 2D histogram is given in the manual. Segmentation is done by histogram backprojection: each pixel location is assigned a new value that depends on its r and g values. The new value is the value of the 2D histogram at location (r*N, g*N). Bright spots correspond to the portions of the image with the same color information as the ROI.
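A minimal sketch of the histogram and backprojection steps, assuming r and g are NumPy arrays with values in [0, 1]. It is not the sample code from the manual; the helper names `bin_indices`, `rg_histogram`, and `backproject`, the default of 32 bins, and the clipping of the top edge into the last bin are all assumptions made for illustration.

```python
import numpy as np

def bin_indices(values, n_bins):
    """Map values in [0, 1] to integer bin indices in [0, n_bins - 1]."""
    return np.minimum((values * n_bins).astype(int), n_bins - 1)

def rg_histogram(r, g, n_bins=32):
    """Normalized N x N histogram of the (r, g) values of a patch."""
    hist = np.zeros((n_bins, n_bins))
    r_idx = bin_indices(r, n_bins).ravel()
    g_idx = bin_indices(g, n_bins).ravel()
    np.add.at(hist, (r_idx, g_idx), 1)  # count every (r, g) pair
    return hist / hist.sum()

def backproject(r, g, hist):
    """Replace each pixel with the histogram value of its (r, g) bin."""
    n_bins = hist.shape[0]
    return hist[bin_indices(r, n_bins), bin_indices(g, n_bins)]

# Hypothetical data: a uniform patch and a two-pixel image.
patch_r = np.full((4, 4), 0.5)
patch_g = np.full((4, 4), 0.25)
image_r = np.array([[0.5, 0.2]])
image_g = np.array([[0.25, 0.6]])

hist = rg_histogram(patch_r, patch_g)
segmented = backproject(image_r, image_g, hist)
```

In this toy case the first pixel shares the patch color and receives the full histogram weight, while the second falls in an empty bin and stays dark.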

Figure 1. Resulting images after nonparametric and parametric segmentation of the patches of the sample images (third and last column). The patches are the small images just above the sample images. The second column consists of the 2D histogram of the patches.

The 2D histograms were checked by comparing their bright portions with the NCC plot above. The bright portions lie at the same positions where the colors of the patches appear in the NCC plot.

Comparing the results of parametric and nonparametric segmentation, it can be seen that the former is the better technique for highlighting portions whose color is more or less the same as the patch. The trace of the fruits in the segmented image is more solid with the parametric technique than with the nonparametric one. This is probably because the Gaussian distribution assigns a higher probability to these colors than the 2D histogram of the patch does. However, it must be noted that the number of bins used for the nonparametric segmentation above is 256. This means the colors and shades of colors are finely separated, so fewer portions of the image are detected as having the same color as the patch. This explains why, in the results above, the nonparametric method has darker and fewer highlighted spots than the parametric method. Figure 2 shows the results when different numbers of bins are used. More fruits are highlighted when only 10 bins are used, and the trace is even more solid than the trace from the parametric method. This is because with fewer bins, more colors of the same shade are grouped together. From the results below, it can be seen that using 100 bins gives the best result for highlighting only the fruits that have exactly the same color as the patch.
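To illustrate why a Gaussian model can give a more solid trace, here is a rough sketch of a parametric likelihood. It assumes, as a simplification that may differ from the procedure used for the results here, that the r and g values of the patch are modeled as independent Gaussians; the function names and sample values are hypothetical.

```python
import numpy as np

def gaussian_pdf(x, mean, std):
    """One-dimensional Gaussian probability density."""
    return np.exp(-((x - mean) ** 2) / (2 * std ** 2)) / (std * np.sqrt(2 * np.pi))

def parametric_likelihood(r, g, patch_r, patch_g):
    """Joint likelihood of (r, g), assuming independent Gaussians fitted to the patch."""
    p_r = gaussian_pdf(r, patch_r.mean(), patch_r.std())
    p_g = gaussian_pdf(g, patch_g.mean(), patch_g.std())
    return p_r * p_g

# Hypothetical patch chromaticities.
patch_r = np.array([0.50, 0.52, 0.54])
patch_g = np.array([0.30, 0.32, 0.34])

# A shade close to the patch but not present in it, and an unrelated color.
near = parametric_likelihood(0.53, 0.33, patch_r, patch_g)
far = parametric_likelihood(0.20, 0.60, patch_r, patch_g)
```

Because the Gaussian is smooth, a nearby shade such as (0.53, 0.33) still receives a sizable likelihood even though it never occurs in the patch, whereas a finely binned histogram would assign it zero.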

Figure 2. Nonparametric segmentation of the banana and grape patches using different numbers of bins (10, 100, 256).
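The effect of the number of bins can be seen with a small sketch. The chromaticity values below are made up for illustration; `rg_bin` is a hypothetical helper that returns the histogram cell of a single (r, g) pair.

```python
def rg_bin(r, g, n_bins):
    """Histogram cell (row, column) of a single (r, g) pair with values in [0, 1]."""
    row = min(int(r * n_bins), n_bins - 1)
    col = min(int(g * n_bins), n_bins - 1)
    return row, col

# Hypothetical values: a patch color and a slightly different shade of it.
patch_color = (0.523, 0.334)
similar_shade = (0.557, 0.368)

# For each bin count, check whether the two shades share a histogram cell.
same_cell = {
    n_bins: rg_bin(*patch_color, n_bins) == rg_bin(*similar_shade, n_bins)
    for n_bins in (10, 100, 256)
}
```

With 10 bins the two shades fall into the same cell, so the similar shade is highlighted; with 100 or 256 bins they are separated, and only pixels much closer to the patch color light up.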

2. Mango: http://carinderia.net/blog/wp-content/uploads/2008/12/mango13.jpg

3. Green Apple: http://2.bp.blogspot.com/_wxeBei5m--0/SeaZnk8DBfI/AAAAAAAAARE/uci2eKrBTtU/s400/apple_green_fruit_240421_l.jpg

4. Red Apple: http://www.ableweb.org/news/winter2009/images/fruitApple1c4.jpg

5. Fruits: http://files.myopera.com/buksiy/albums/739313/Fruits.jpg

6. Apple Tree: http://www.kevinecotter.com/appletree.jpg